five5quare

Beautiful music and hypnotic animation are at your fingertips. With five5quare, if you can bend your fingers, you can play a song!

Team and roles

Arnav Wagh - Visual animation, Code, Prototyping

Max Horwich - Audio, Code

Amena Hayat - Hardware, Code

Tools and Technology

p5.js

Tone.js

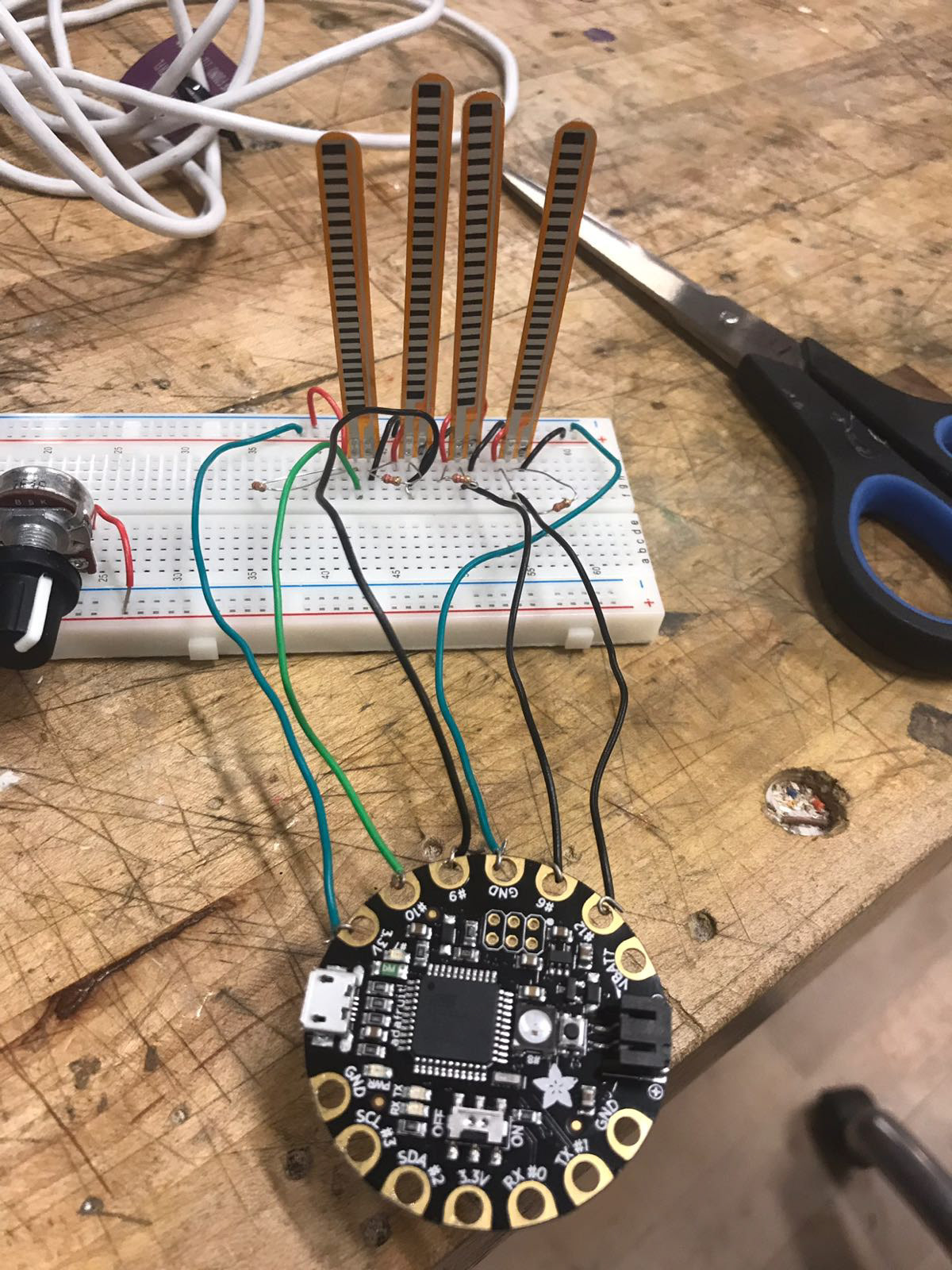

Breadboard Electronics and Adafruit Flora

The strength of this project is in the collaboration. I was sure I wanted to make some form of visual musical interface or instrument for non-musicians, but since I had no understanding of music theory, I needed to partner with someone who did. I met two students who had similar ideas about using a glove-based gestural interface. We got together and spent a week ideating on possible interfaces with the given controls.

As the primary target user, with no formal knowledge of music, I had to come up with suitable gestural and visual metaphors on which to model the interface. I wanted to strike a balance between enough structure to constrain the musical output, so that the music doesn't sound random and incoherent, and a freestyle component that gives the user a sense of spontaneity when playing melodies.

What emerged was a holistic musical interface that combines a non-traditional graphic notation with gesture-based wearable controls simple enough for a non-musician to use comfortably. It sits halfway between a traditional step sequencer and Kandinsky, one of the Chrome Music Lab experiments: the step sequencer is where you loop different instruments, while the drawing program and the glove together let you freestyle over the music.
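
The step-sequencer half of that idea can be sketched as a grid of looping patterns. This is a minimal illustration, not the project's code: the instrument names, the 8-step length, and the patterns below are hypothetical, and the plain step counter stands in for whatever clock actually drives playback.

```javascript
// Hypothetical 8-step patterns: 1 = play on this step, 0 = rest.
const patterns = {
  kick:  [1, 0, 0, 0, 1, 0, 0, 0],
  snare: [0, 0, 1, 0, 0, 0, 1, 0],
  bass:  [1, 0, 1, 0, 1, 0, 1, 0],
};

// Return the names of the instruments that should trigger on a given step.
// The step counter keeps increasing; the modulo makes each pattern loop.
function activeInstruments(patterns, step) {
  return Object.keys(patterns).filter((name) => {
    const pattern = patterns[name];
    return pattern[step % pattern.length] === 1;
  });
}
```

For example, step 0 triggers the kick and bass, and step 10 wraps around to the same result as step 2 (snare and bass). Because every pattern loops on its own length, instruments with different pattern lengths would drift against each other, which is one way to get variation out of very little input.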

Process

We decided to use the Tone.js library as the primary engine for all sound synthesis. The most challenging, yet most exciting, part of the process was how our individual work streams converged and diverged within the team. It was a bit of a catch-22: the visuals and animations depended on the sound, and the sound depended on the visuals. So each of us explored in our own direction and built on one another's work, step by step.

As an early proof of concept (POC), I put together a visualization of the interface as something to aim toward.

Each color is a separate channel playing a different sound, and the shape dictates the texture and timbre of that sound. The position of the shape on the canvas sets its pitch, and its opacity sets the volume.
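
One way to read that mapping as code is a small function that turns a drawn shape into sound parameters. This is a sketch under stated assumptions: the color, shape, and note tables are hypothetical, pitch is assumed to follow the shape's vertical position, and opacity is assumed to use p5's 0-255 alpha range.

```javascript
// Hypothetical lookup tables; the real project's choices may differ.
const CHANNEL_BY_COLOR = { red: 0, blue: 1, yellow: 2 };
const TIMBRE_BY_SHAPE = { circle: "sine", square: "square", triangle: "triangle" };
const PITCHES = ["C4", "D4", "E4", "G4", "A4", "C5"]; // low to high

// Map a drawn shape to sound parameters:
// color -> channel, shape -> timbre, vertical position -> pitch, opacity -> volume.
function shapeToSound(shape, canvasHeight) {
  // Higher on the canvas (smaller y) means a higher pitch.
  const t = 1 - shape.y / canvasHeight; // 0 at the bottom, 1 at the top
  const index = Math.min(PITCHES.length - 1, Math.floor(t * PITCHES.length));
  return {
    channel: CHANNEL_BY_COLOR[shape.color],
    timbre: TIMBRE_BY_SHAPE[shape.kind],
    pitch: PITCHES[Math.max(0, index)],
    volume: shape.alpha / 255, // opacity 0-255 -> volume 0-1
  };
}
```

Keeping every mapping in a table makes it easy to swap a whole scale or palette without touching the drawing code.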

Next, I made a POC of a basic drawing program, with two different oscillators mapped to the x and y axes.

sketch – http://alpha.editor.p5js.org/Arnav/sketches/B1D0JY1xz
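
The x/y mapping in that drawing POC boils down to re-mapping mouse coordinates onto two frequency ranges. A minimal sketch, assuming linear mapping and hypothetical ranges of 110-880 Hz for the x oscillator and 110-440 Hz for the y oscillator; `mapRange` mirrors what p5's `map()` does when its optional `withinBounds` argument is true.

```javascript
// Linear re-mapping with the result clamped to the output range,
// like p5's map(value, start1, stop1, start2, stop2, true).
function mapRange(value, inMin, inMax, outMin, outMax) {
  const t = (value - inMin) / (inMax - inMin);
  const clamped = Math.min(1, Math.max(0, t));
  return outMin + clamped * (outMax - outMin);
}

// Hypothetical frequency ranges for the two oscillators.
function oscillatorFrequencies(mouseX, mouseY, width, height) {
  return {
    oscX: mapRange(mouseX, 0, width, 110, 880),  // left-to-right sweep
    oscY: mapRange(mouseY, height, 0, 110, 440), // bottom-to-top sweep
  };
}
```

Inside p5's `draw()`, the two results could be written to each oscillator's frequency on every frame. A linear map is the simplest choice; because pitch is perceived logarithmically, an exponential mapping would make equal mouse movements sound like more equal pitch steps.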

POC of Web interface + Serial control

This one uses a potentiometer in place of flex sensors.

By this time the team was making a lot of progress in understanding and creating sounds with Tone.js. To familiarize myself with the library and test the serial interaction with the sketch, I put together a basic generative music program with sliders that control the tempo through the frame rate. As the flex sensor was giving me erratic values in p5, I mapped the slider values to a potentiometer instead (to simulate the flex sensors on the glove).

sketch – http://alpha.editor.p5js.org/Arnav/sketches/Sy5pAHIlz
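
The pot-to-tempo idea can be sketched as two small mappings. This assumes the board sends raw 10-bit analog readings (0-1023), that the sketch plays one note every few frames, and that the 2-30 fps range is a hypothetical choice; none of these values come from the original sketch.

```javascript
// Assumes the microcontroller sends raw 10-bit analog readings (0-1023).
const POT_MAX = 1023;

// Map a pot reading to a frame rate within a hypothetical range.
function potToFrameRate(reading, minFps = 2, maxFps = 30) {
  const clamped = Math.min(POT_MAX, Math.max(0, reading));
  return minFps + (clamped / POT_MAX) * (maxFps - minFps);
}

// If the sketch triggers one note every `framesPerNote` frames,
// the resulting tempo in notes per minute is:
function notesPerMinute(frameRate, framesPerNote) {
  return (frameRate * 60) / framesPerNote;
}
```

The frame rate would then go to p5's `frameRate()`. Tying tempo to the frame rate keeps the sketch simple, at the cost of making the music only as steady as the browser's rendering.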

I wanted to create different tempo rates for different sound families and control them with a potentiometer. Changing the tempo seemed a quick and easy way to add a rich level of expression to the music being created.
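
A minimal sketch of per-family tempos, assuming a single potentiometer sets a base tempo and each sound family runs at a fixed multiple of it. The family names, multipliers, and 60-140 BPM range are hypothetical.

```javascript
// Hypothetical per-family tempo multipliers relative to the base tempo.
const FAMILY_RATE = { drums: 1, bass: 0.5, melody: 2 };

// Map a 10-bit pot reading to a base tempo, then derive each family's rate.
function familyTempos(reading, minBpm = 60, maxBpm = 140) {
  const t = Math.min(1, Math.max(0, reading / 1023));
  const base = minBpm + t * (maxBpm - minBpm);
  const tempos = {};
  for (const [family, rate] of Object.entries(FAMILY_RATE)) {
    tempos[family] = base * rate;
  }
  return tempos;
}
```

Keeping the ratios fixed means the families stay rhythmically locked to each other as the pot sweeps, so the tempo can change without the layers drifting apart.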

For the tangible control, we settled on a gestural interface: 4 flex sensors (read by an Adafruit Flora board) sewn onto the glove, one for each of 4 fingers, plus either a light sensor or an accelerometer at the palm to modulate a sound parameter.
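
Raw flex-sensor readings differ from finger to finger, so a common first step is to calibrate each sensor and normalize its reading to a 0-1 bend amount. The sketch below assumes hypothetical calibration values, a hypothetical finger order, and a 0.5 threshold for counting a finger as bent; real numbers depend on the sensors and the circuit they are wired into.

```javascript
// Hypothetical calibration: raw readings for a straight and a fully bent finger.
// Real values depend on the sensors and the circuit they are wired into.
const CALIBRATION = [
  { straight: 300, bent: 600 }, // index (hypothetical order)
  { straight: 310, bent: 620 }, // middle
  { straight: 290, bent: 580 }, // ring
  { straight: 320, bent: 640 }, // little
];

// Normalize a raw reading to a 0-1 bend amount. Works whether bending raises
// or lowers the reading, since the sign of (bent - straight) handles both cases.
function bendAmount(raw, { straight, bent }) {
  const t = (raw - straight) / (bent - straight);
  return Math.min(1, Math.max(0, t));
}

// Report which fingers count as bent, given one reading per finger.
function bentFingers(readings, threshold = 0.5) {
  return readings.map((raw, i) => bendAmount(raw, CALIBRATION[i]) >= threshold);
}
```

Normalized values like these could feed either continuous controls (a bend amount per finger) or on/off triggers (the thresholded booleans).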

I started exploring the different sound analyzers in the Tone.js library and used their data to animate each shape.

Circle / Kick

Currently, I am using the progress value from Tone.js to track the kick loop as it moves through time. The returned values are mapped to the circle's size in reverse, so the circle swells to its peak as the kick hits.
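
The reverse mapping can be sketched as a single function. It assumes the kick lands at the very start of each loop iteration, where progress is 0, and uses hypothetical minimum and maximum diameters in pixels.

```javascript
// Map a loop's progress (0 at the start of the loop, 1 at the end) to a
// circle diameter "in reverse": largest at progress 0, when the kick is
// assumed to fire, then shrinking until the loop wraps around.
function kickDiameter(progress, minD = 40, maxD = 200) {
  const p = Math.min(1, Math.max(0, progress));
  return maxD - p * (maxD - minD);
}
```

In p5's `draw()`, the loop's current progress (Tone.js loops report one between 0 and 1) could be passed in every frame, for example `const d = kickDiameter(kickLoop.progress); ellipse(x, y, d, d);`, where `kickLoop`, `x`, and `y` are hypothetical names.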

Square / Bass

I am using a waveform analyzer on the bass. The waveform analyzer returns its values as a Float32Array. It took me nearly half a day to get this working. At first, I tried mapping the array values to a single line, but instead of getting a granular visualization of the waveform, I was only able to move a few points on the line, which led to much frustration! As a naive attempt at getting a more pronounced waveform, I ran the bass sound through a distortion effect. Eventually, I used the array values to make the square vibrate to the waveform. I played around with the array size and skipped a few pixels until I achieved a satisfactory amount of vibration.
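
The vibrating square can be sketched as an outline whose edges are pushed along their outward normals by waveform samples. This is an illustration under assumptions: the samples are taken to be in the -1 to 1 range that waveform analyzers typically return, `step` plays the role of skipping samples, and `amplitude` is a hypothetical pixel scale.

```javascript
// Downsample: keep every `step`-th sample of the analyzer's waveform.
function downsample(waveform, step) {
  const out = [];
  for (let i = 0; i < waveform.length; i += step) out.push(waveform[i]);
  return out;
}

// Build the outline of a square whose edges are pushed in and out by
// waveform samples. y grows downward, as on a p5 canvas.
function vibratingSquare(waveform, x, y, size, amplitude, step = 1) {
  const samples = downsample(waveform, step);
  const perEdge = Math.floor(samples.length / 4);
  const edges = [
    { from: [x, y], to: [x + size, y], normal: [0, -1] },               // top
    { from: [x + size, y], to: [x + size, y + size], normal: [1, 0] },  // right
    { from: [x + size, y + size], to: [x, y + size], normal: [0, 1] },  // bottom
    { from: [x, y + size], to: [x, y], normal: [-1, 0] },               // left
  ];
  const points = [];
  edges.forEach((edge, e) => {
    for (let j = 0; j < perEdge; j++) {
      const t = j / perEdge; // position along the edge
      const offset = samples[e * perEdge + j] * amplitude;
      points.push([
        edge.from[0] + (edge.to[0] - edge.from[0]) * t + edge.normal[0] * offset,
        edge.from[1] + (edge.to[1] - edge.from[1]) * t + edge.normal[1] * offset,
      ]);
    }
  });
  return points;
}
```

The points can be drawn in p5 with `beginShape()`, one `vertex()` per point, and `endShape(CLOSE)`. A larger `step` gives fewer, coarser bumps, which is the same trade-off as skipping pixels.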

Triangle / Snare

I used the waveform again to shake the triangle slightly as the snare hits.
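
A sketch of that shake, assuming the triangle is simply translated by an offset read from the snare's waveform buffer. Which samples set the direction and the 6-pixel maximum are hypothetical choices; because the offset scales with the samples, the triangle stays still when the snare is silent.

```javascript
// Translate a triangle's vertices by a small offset taken from the waveform.
// Samples are assumed to lie in -1..1, so the shake never exceeds maxShake pixels.
function shakenTriangle(vertices, waveform, maxShake = 6) {
  if (waveform.length === 0) return vertices.map(([x, y]) => [x, y]);
  const mid = Math.floor(waveform.length / 2);
  const dx = waveform[0] * maxShake;   // horizontal nudge from the first sample
  const dy = waveform[mid] * maxShake; // vertical nudge from the middle sample
  return vertices.map(([x, y]) => [x + dx, y + dy]);
}
```

Reading the direction from the audio itself, rather than from a random number generator, ties the motion to the sound the listener actually hears.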

Dynamic dots / Piano

The piano chords are very rich in sound, and the animations had to do them justice. The piano loop has 4 sets of chords, each with 3 notes. I wanted a circle to fade in each time a chord was played from any given loop. This was very challenging to achieve, because the sound and its timing were handled inside the Tone.js library. Finally, with help from Shivanku, I was able to sync the animation with the chords. I used the Tone.js progress value as a global timer and broke it down into three phases, then triggered a different circle animation in each phase.
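
Splitting a single progress value into phases can be sketched as follows. It assumes progress runs from 0 to 1 over the loop and that the three phases are equal in length (an assumption, since the chords may not be evenly spaced), and it turns the position within each phase into a p5-style alpha value so that each phase's circle fades in.

```javascript
// Split a 0-1 progress value into `phases` equal parts and report which part
// we are in, plus how far through it we are. Each phase can drive its own
// circle, whose opacity ramps up (fades in) across that phase.
function phaseAt(progress, phases = 3) {
  const p = Math.min(1, Math.max(0, progress));
  const scaled = p * phases;
  const index = Math.min(phases - 1, Math.floor(scaled));
  const local = scaled - index;         // 0..1 within the current phase
  return { index, alpha: local * 255 }; // alpha suits p5's fill(r, g, b, a)
}
```

Using one shared timer for all three circles keeps them in step with each other, since they all read the same clock instead of keeping their own.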

Paint / Lead

The paint program plays different notes as you paint across the screen. The notes are mapped to different zones on the canvas.
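
A minimal sketch of zone-to-note mapping, assuming the zones are equal-width vertical strips across the canvas and using a hypothetical five-note scale. The write-up doesn't say how the real zones are laid out.

```javascript
// Hypothetical scale: one note per vertical strip of the canvas, low to high.
const ZONE_NOTES = ["C4", "D4", "E4", "G4", "A4"];

// Return the note for the zone under horizontal position x.
function noteForX(x, width, notes = ZONE_NOTES) {
  const zoneWidth = width / notes.length;
  const index = Math.floor(x / zoneWidth);
  // Clamp so strokes that leave the canvas still map to the edge zones.
  return notes[Math.min(notes.length - 1, Math.max(0, index))];
}
```

Note names in this form can be passed to a Tone.js synth's `triggerAttackRelease()`. Triggering only when the zone under the brush changes avoids restarting the same note on every frame.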

In parallel, Amena was making progress on the glove. The glove uses a simple circuit: 4 flex sensors, each wired to its own pin on the Flora board.
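
On the sketch side, each reading from the glove has to be parsed before it can drive the sound. A minimal sketch, assuming the Flora prints the four flex values as one comma-separated line per reading; that message format is hypothetical, and the parser rejects partial or garbled lines rather than guessing.

```javascript
// Parse one serial line such as "512,300,700,45" into four integers.
// Returns null for incomplete or garbled lines, which are common when a
// serial connection starts mid-message.
function parseFlexLine(line, expected = 4) {
  const parts = line.trim().split(",");
  if (parts.length !== expected) return null;
  // Every field must be a non-negative integer (analog readings are never negative).
  if (!parts.every((p) => /^\d+$/.test(p.trim()))) return null;
  return parts.map((p) => Number(p));
}
```

Dropping bad lines outright, instead of substituting zeros, keeps a single corrupted message from making a finger look suddenly straight.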